What are the Best Practices for Creating a Backup Copy Job?

Follow the recommendations below:

- Keep in mind that, by default, a Backup Copy Job will not copy a restore point to the same repository it already resides in, because such a copy makes no sense for disaster recovery (DR).

- Give the Backup Copy Job a copy interval long enough to transfer the data. This is especially important for Backup Copy Jobs that run over slow networks.

- Also make sure that the source job does not take until the end of the copy interval to create its restore point. Otherwise, the Backup Copy Job will not have time to copy all the data and will finish with a warning.

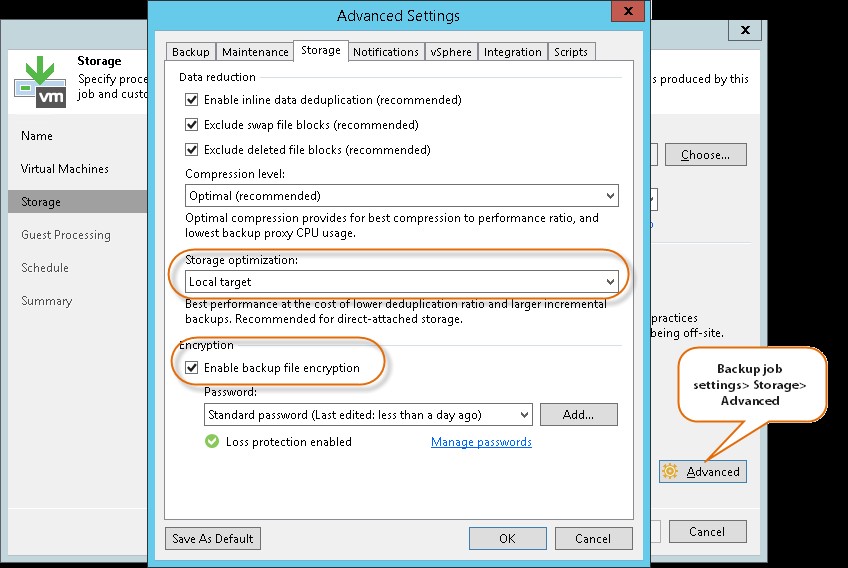

- A Backup Copy Job detects the block size of the first source restore point it processes. If the block size of the source job changes later (for example, a user changes the Storage optimization setting), the Backup Copy Job will not be able to copy the new restore points. You will then have to either change the block size back on the source job or run an active full on the Backup Copy Job. The same applies when you enable encryption for the source job.

- Even though a Backup Copy Job does not put any load on the production VM, it still requests some information from vSphere. As a result, a Backup Copy Job cannot copy a VM that has been deleted from vSphere.

- Make sure that the source jobs generate a valid restore point in every copy interval; otherwise, the Backup Copy Job will end with a warning. For example, do not set the copy interval to 1 day if the source job creates a new restore point only once a week.

- If you enable the “Read the entire point…” option, schedule GFS points to be created regularly (preferably weekly). With this option, the Backup Copy Job switches to forward incremental mode, and the retention policy can be applied only when a new full restore point appears. For example, if GFS points are created only yearly, retention for incremental points will hardly ever be applied.
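
The timing recommendations above (a long enough copy interval, a source job that finishes early, and a restore point in every interval) can be sanity-checked with some rough arithmetic before the job is created. The sketch below is a standalone planning helper, not part of any Veeam API. The function names, the decimal unit conversion, and the worst-case assumption that the source job starts at the beginning of the copy interval are all illustrative assumptions.

```python
from datetime import timedelta


def estimated_transfer_time(data_gb: float, link_mbps: float) -> timedelta:
    """Rough time to move data_gb gigabytes over a link of link_mbps megabits/s.

    Assumes decimal units (1 GB = 8000 megabits) and ignores compression,
    deduplication, and protocol overhead.
    """
    seconds = (data_gb * 8 * 1000) / link_mbps
    return timedelta(seconds=seconds)


def check_copy_interval(
    copy_interval: timedelta,
    source_period: timedelta,        # how often the source job creates a point
    source_job_duration: timedelta,  # how long the source job usually runs
    data_gb: float,                  # expected amount of data to copy
    link_mbps: float,                # usable bandwidth to the target
) -> list[str]:
    """Return a list of likely problems; an empty list means none were found."""
    problems = []
    # A new restore point must appear within every copy interval.
    if copy_interval < source_period:
        problems.append(
            "source job does not create a restore point in every copy interval"
        )
    # Hypothetical worst case: the source job starts when the interval starts,
    # so its duration and the transfer must both fit inside one interval.
    transfer = estimated_transfer_time(data_gb, link_mbps)
    if source_job_duration + transfer > copy_interval:
        problems.append("not enough time left in the interval to copy the data")
    return problems


if __name__ == "__main__":
    # Daily copy interval, but the source job runs only weekly.
    for problem in check_copy_interval(
        copy_interval=timedelta(days=1),
        source_period=timedelta(weeks=1),
        source_job_duration=timedelta(hours=2),
        data_gb=100,
        link_mbps=100,
    ):
        print("Warning:", problem)
```

The estimate is deliberately pessimistic about scheduling and optimistic about throughput, so treat a clean result as a starting point and compare it against real job statistics.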